[Editor’s Note – the author of this post, Robin Ren, is the CTO of XtremIO]

Zero blocks are data blocks that are… well, filled with zeros. But how your array treats them can make a big difference…

Why zero blocks are special

In many operating systems, when you initialize a disk or a volume, you fill it with blocks of zeros. Because it is such a common storage operation, VMware has a specific VAAI block-zeroing verb to offload this operation to the underlying storage array. Instead of the host getting involved in writing every single zero block, it simply asks the array to do the zeroing on its behalf. This makes the preparation of, for example, a multi-TB thick-eager-zero virtual disk much faster.
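To see why offloading helps, here is a rough sketch that counts host-issued requests for zeroing a disk with and without offload. It assumes 4KB blocks, as elsewhere in this post, and a hypothetical per-request limit (`max_blocks_per_request`); the function names are illustrative and not part of any VMware API. On the wire, VAAI block zeroing is carried by the SCSI WRITE SAME command, which describes a range of blocks instead of shipping every zero block.

```python
BLOCK_SIZE = 4 * 1024  # 4KB logical blocks, as elsewhere in this post


def blocks_in(disk_bytes: int) -> int:
    # Ceiling division: a partial trailing block still has to be zeroed.
    return -(-disk_bytes // BLOCK_SIZE)


def requests_without_offload(disk_bytes: int) -> int:
    # The host writes every zero block itself: one 4KB write per block.
    return blocks_in(disk_bytes)


def zero_range_requests(disk_bytes: int, max_blocks_per_request: int):
    # With offload, the host only describes ranges ("zero N blocks starting
    # at this LBA") and the array does the work.
    # max_blocks_per_request is a hypothetical per-command limit.
    total = blocks_in(disk_bytes)
    lba = 0
    while lba < total:
        count = min(max_blocks_per_request, total - lba)
        yield lba, count
        lba += count


if __name__ == "__main__":
    one_tib = 2**40
    print(requests_without_offload(one_tib))                  # 268435456
    print(sum(1 for _ in zero_range_requests(one_tib, 256)))  # 1048576
```

For a 1TiB disk, that is 268,435,456 individual 4KB writes versus 1,048,576 range requests at an assumed 256 blocks (1MiB) per request.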

Zero blocks are also significant for flash drives, for a completely different reason. Flash has a peculiar behavior: you can write an individual 4K page only if it is in the erased (“clean”) state. What happens when you need to overwrite a page that already holds data (“dirty”)? You cannot, unless you erase the page first. But you cannot erase individual 4K pages. You have to erase an entire group of such pages (128 or more), called an ‘erase block’, together. This is both time- and energy-consuming, because flash erases and writes are far slower than reads. To mask this effect from the host operating system, today’s SSD controllers virtualize the internal physical pages and park new writes in extra over-provisioned pages. In the background, they constantly perform SSD-level garbage collection, gathering invalidated pages together so that block erases can be performed asynchronously without sapping performance. This keeps clean pages available for future writes.
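The erase-before-write constraint and the controller’s workaround can be sketched as a toy model. This is a simplified illustration, not how any particular SSD controller works: erase blocks hold just four pages, `None` stands in for the erased state, writes go out of place to the next clean page, and the hypothetical `collect_garbage` only erases blocks whose pages are all stale (a real controller would also relocate still-valid pages).

```python
from dataclasses import dataclass, field

PAGES_PER_BLOCK = 4  # tiny for illustration; real erase blocks hold 128 or more pages


@dataclass
class EraseBlock:
    # None marks an erased ("clean") page that may be programmed.
    pages: list = field(default_factory=lambda: [None] * PAGES_PER_BLOCK)
    erase_count: int = 0

    def program(self, index: int, data: bytes) -> None:
        if self.pages[index] is not None:
            raise ValueError("page is dirty: erase the whole block first")
        self.pages[index] = data

    def erase(self) -> None:
        # Erasure works on the whole block, never on a single page.
        self.pages = [None] * PAGES_PER_BLOCK
        self.erase_count += 1


class ToyFTL:
    """Out-of-place writes plus deferred erasure, in the spirit of an SSD controller."""

    def __init__(self, num_blocks: int):
        self.blocks = [EraseBlock() for _ in range(num_blocks)]
        self.mapping = {}     # logical page -> (block, page)
        self.invalid = set()  # physical (block, page) slots holding stale data
        self.next_slot = 0    # simplified append-only allocator; erased blocks are not reused

    def write(self, logical_page: int, data: bytes) -> None:
        block, page = divmod(self.next_slot, PAGES_PER_BLOCK)
        self.blocks[block].program(page, data)  # IndexError once the toy device is full
        old = self.mapping.get(logical_page)
        if old is not None:
            self.invalid.add(old)  # the old copy is only marked stale, not erased now
        self.mapping[logical_page] = (block, page)
        self.next_slot += 1

    def read(self, logical_page: int) -> bytes:
        block, page = self.mapping[logical_page]
        return self.blocks[block].pages[page]

    def collect_garbage(self) -> int:
        """Erase every block whose pages are all stale; return how many were erased."""
        erased = 0
        for b, blk in enumerate(self.blocks):
            slots = {(b, p) for p in range(PAGES_PER_BLOCK)}
            if slots <= self.invalid:
                blk.erase()
                self.invalid -= slots
                erased += 1
        return erased
```

Writing the same logical page five times consumes five physical pages; only after the first four become stale can the first erase block be erased as a whole.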

The bottom line: writing zero blocks to (or erasing existing blocks from) SSDs is a resource-intensive operation. So the question is: how can a storage array be designed so these operations can be avoided or minimized?

Conventional flash arrays do not have special treatment for zero blocks

Although zero blocks are special, most storage arrays do not treat them any differently from non-zero data blocks. They take the same amount of space for data and metadata, require the same processing overhead, and get the same protection from RAID, replication, etc. This is because most block storage arrays are not content-aware: they deal with LUNs and LBAs and faithfully store and recall data blocks without paying attention to what each block actually contains.

Figure 1. How traditional arrays treat zero blocks. Here 24KB of zeros are stored on the array in six successive 4KB pages.
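A non-content-aware array, as in Figure 1, can be modeled as little more than an LBA-to-page map. The sketch below is hypothetical (`PlainArray` is an illustrative name, and flash-level out-of-place writes are hidden); it just shows that six 4KB zero blocks cost six pages and six metadata entries.

```python
BLOCK_SIZE = 4096


class PlainArray:
    """Hypothetical non-content-aware array: each LBA gets its own physical page."""

    def __init__(self):
        self.lba_to_page = {}  # metadata: one entry per written LBA
        self.pages = []        # "flash": one 4KB page per written LBA

    def write(self, lba: int, data: bytes) -> None:
        if len(data) != BLOCK_SIZE:
            raise ValueError("expected a 4KB block")
        if lba in self.lba_to_page:
            # Array-level overwrite; flash-level out-of-place writes are hidden here.
            self.pages[self.lba_to_page[lba]] = data
        else:
            self.lba_to_page[lba] = len(self.pages)
            self.pages.append(data)

    def read(self, lba: int) -> bytes:
        return self.pages[self.lba_to_page[lba]]


# Figure 1: 24KB of zeros written as six successive 4KB blocks.
array = PlainArray()
for lba in range(6):
    array.write(lba, bytes(BLOCK_SIZE))
print(len(array.pages), len(array.lba_to_page))  # 6 6
```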

On the other hand, with a content-based storage engine, an XtremIO array can tell a zero block apart from others. This leads to some very interesting optimizations that are nothing short of magic.

Zero blocks are Xtra-special in XtremIO

Remember that XtremIO has global inline deduplication, which means that no matter how many times a specific 4KB data pattern is written to the array, only one copy of it is ever stored on flash. You can imagine that, with deduplication alone, each of those logical 4KB zero blocks would have a mapping from its logical address (LBA) to the same fingerprint, the one shared by every zero block. That fingerprint would in turn map to the single zero block stored on SSD. This is shown in Figure 2.

Figure 2. How other arrays with deduplication treat zero blocks. A metadata entry for each LBA containing zeros maps to a single zero block stored on flash. The zeros don’t take up space, but the metadata still consumes memory.
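Here is a minimal sketch of the dedup-only behavior in Figure 2, assuming SHA-256 as a stand-in for the array’s content fingerprint and a simple reference count per stored block; `DedupArray` and its fields are hypothetical names. The zeros themselves are stored once, but every zero LBA still gets its own metadata entry.

```python
import hashlib

BLOCK_SIZE = 4096


def fingerprint(data: bytes) -> str:
    # SHA-256 is an illustrative stand-in for the array's content fingerprint.
    return hashlib.sha256(data).hexdigest()


class DedupArray:
    """Hypothetical dedup-only array: zero blocks are handled like any other content."""

    def __init__(self):
        self.lba_to_fp = {}    # one metadata entry per written LBA, zeros included
        self.fp_to_block = {}  # fingerprint -> [stored data, reference count]

    def write(self, lba: int, data: bytes) -> None:
        if len(data) != BLOCK_SIZE:
            raise ValueError("expected a 4KB block")
        fp = fingerprint(data)
        old = self.lba_to_fp.get(lba)
        if old == fp:
            return  # same content already mapped here
        if old is not None:
            self._release(old)
        entry = self.fp_to_block.setdefault(fp, [data, 0])  # store only the first copy
        entry[1] += 1
        self.lba_to_fp[lba] = fp

    def _release(self, fp: str) -> None:
        entry = self.fp_to_block[fp]
        entry[1] -= 1
        if entry[1] == 0:
            del self.fp_to_block[fp]  # last reference gone

    def read(self, lba: int) -> bytes:
        return self.fp_to_block[self.lba_to_fp[lba]][0]


array = DedupArray()
for lba in range(6):
    array.write(lba, bytes(BLOCK_SIZE))
print(len(array.lba_to_fp), len(array.fp_to_block))  # 6 1
```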

XtremIO saw unique opportunities to leverage our content-aware architecture to be even more efficient than this. Because zero blocks are such a special and commonly used data pattern in so many applications, we have special optimizations for it.

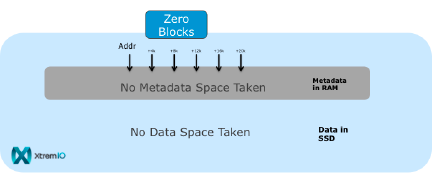

XtremIO arrays do not actually store any zero blocks at all – not even a single unique copy. We make no record of zero blocks in either our metadata or data layers. Yep — zero, nil, naught. Let me explain.

When a host writes a zero block to an XtremIO array at a certain LBA, we immediately recognize it as a 4KB block filled with zeros, because all zero blocks share the same content fingerprint, which the array knows in advance. Upon identifying this fingerprint, we immediately acknowledge the write to the host without doing anything else internally. We do not record an LBA-to-fingerprint mapping, nor do we write the zero block to the flash media.

Figure 3. Writing zero blocks to XtremIO consumes neither metadata nor data space
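The zero-block write path can be sketched as follows. This is an illustration under assumptions, not XtremIO code: SHA-256 stands in for the content fingerprint, `ZeroAwareArray` is a hypothetical name, and the sketch assumes that a zero write over a previously mapped LBA drops the old mapping, which any correct implementation has to do so that later reads do not return stale data.

```python
import hashlib

BLOCK_SIZE = 4096
ZERO_BLOCK = bytes(BLOCK_SIZE)
# The zero block's fingerprint is a constant the array can know in advance.
ZERO_FP = hashlib.sha256(ZERO_BLOCK).hexdigest()


class ZeroAwareArray:
    """Hypothetical array that never records zero blocks (SHA-256 as assumed fingerprint)."""

    def __init__(self):
        self.lba_to_fp = {}    # LBA -> fingerprint, non-zero blocks only
        self.fp_to_block = {}  # fingerprint -> [stored data, reference count]
        self.flash_writes = 0  # unique blocks actually written to "flash"

    def write(self, lba: int, data: bytes) -> str:
        fp = hashlib.sha256(data).hexdigest()
        if fp == ZERO_FP:
            # Zero block: no mapping recorded, no data written; just acknowledge.
            # Assumption: an old non-zero mapping at this LBA must be dropped,
            # otherwise a later read would return stale data.
            self._unmap(lba)
            return "ack"
        if self.lba_to_fp.get(lba) == fp:
            return "ack"  # same content already mapped here
        self._unmap(lba)
        entry = self.fp_to_block.get(fp)
        if entry is None:
            entry = self.fp_to_block[fp] = [data, 0]
            self.flash_writes += 1  # first copy of this content
        entry[1] += 1
        self.lba_to_fp[lba] = fp
        return "ack"

    def _unmap(self, lba: int) -> None:
        fp = self.lba_to_fp.pop(lba, None)
        if fp is None:
            return
        entry = self.fp_to_block[fp]
        entry[1] -= 1
        if entry[1] == 0:
            del self.fp_to_block[fp]


array = ZeroAwareArray()
for lba in range(6):
    array.write(lba, ZERO_BLOCK)
print(len(array.lba_to_fp), len(array.fp_to_block), array.flash_writes)  # 0 0 0
```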

Writing is the easy part. But any storage has to be able to retrieve data faithfully upon subsequent reads. How does XtremIO do this when there is no record of the zero blocks written to the array at all? When the host does a read operation at an LBA that was previously written with a zero block, the XtremIO array will not find any mapping in the LBA-to-fingerprint metadata. In such a case, it simply returns a zero block to the host. Done. Problem solved.

As you can see, reads and writes of zero blocks are hyper-efficient, with no need to record metadata or to read or write data on the SSDs. The result is super-fast handling of zero blocks, and no SSD wear-and-tear.

Some of you may ask whether such an optimization has any potential issues. This has actually been thought through very carefully. If a non-zero block was previously written at an LBA, any subsequent read of that LBA will find a record in the array’s LBA-to-fingerprint metadata. From the fingerprint-to-physical mapping, the array then finds the original non-zero block and returns it to the host faithfully. (If that LBA is later overwritten with zeros, its old mapping has to be removed, just as with an unmap, so that reads return zeros rather than stale data.)

Now the tricky question — what happens when the host tries to read an unknown LBA, one that has not been written previously, or has been written but later deleted (technically unmapped)? In this case, no mapping can be found in the LBA-to-fingerprint metadata. The XtremIO array will return a zero block! But this is exactly the correct and expected behavior for a fully initialized and zeroed-out LUN or volume.
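On the read side, the whole trick is that a missing LBA mapping means “zeros”. A hypothetical `read_block` function over the two metadata maps (modeled here as plain dictionaries, with SHA-256 as an assumed fingerprint) covers every case above: a non-zero block written earlier, a zero block written earlier (never recorded), an LBA never written, and an unmapped LBA.

```python
import hashlib

BLOCK_SIZE = 4096
ZERO_BLOCK = bytes(BLOCK_SIZE)


def read_block(lba: int, lba_to_fp: dict, fp_to_block: dict) -> bytes:
    """Hypothetical read path: no LBA mapping means the answer is a zero block."""
    fp = lba_to_fp.get(lba)
    if fp is None:
        # Zero block written earlier (never recorded), LBA never written,
        # or LBA unmapped: all three look the same and read back as zeros.
        return ZERO_BLOCK
    return fp_to_block[fp]  # fingerprint -> stored non-zero block


# Metadata after writing a non-zero block at LBA 7 and a zero block at LBA 8.
data = b"\x01" * BLOCK_SIZE
fp = hashlib.sha256(data).hexdigest()  # SHA-256 as an assumed fingerprint
lba_to_fp = {7: fp}
fp_to_block = {fp: data}
```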

What happens if the host tries to read an unknown LBA on an uninitialized volume? Since the volume is uninitialized, the host cannot expect any particular content at that LBA: the data is undefined. Returning zero blocks is as good as, and indeed safer than, returning any random data.

The bottom line: XtremIO has all the right behavior for zero blocks at all times. Since zero blocks are indeed used a lot in databases, virtualized servers and desktops, this optimization brings great performance gains, superior capacity utilization, and a much longer flash lifecycle.

Furthermore, XtremIO arrays are inherently thinly provisioned. When the host allocates an eager-zeroed thick (EZT) virtual disk with VAAI block zeroing, the XtremIO array still thinly provisions the space, starting with absolutely no consumed SSD space at all! Preparing or initializing such an EZT disk is super-fast, because writing zeros never touches the SSDs. Each unique 4KB block written afterwards consumes exactly 4KB of additional space. So you get the best of both worlds: the most efficient flash consumption, with full inline deduplication and thin provisioning benefits, and none of the run-time overhead of lazy-zeroed or thin-format virtual disks on the ESX hosts. XtremIO is virtually thick to ESX but physically thin in the array. Pretty cool: saved by zero.
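The “virtually thick, physically thin” arithmetic can be sketched as a function over a snapshot of a virtual disk’s contents. It follows the post’s model (zero blocks cost nothing; each distinct non-zero 4KB block costs 4KB once) and ignores metadata overhead and any data reduction beyond deduplication; `physical_bytes_used` and `logical_bytes` are hypothetical helpers.

```python
BLOCK_SIZE = 4096
ZERO_BLOCK = bytes(BLOCK_SIZE)


def physical_bytes_used(volume: dict) -> int:
    """Space consumed under the post's model: zero blocks are free and each
    distinct non-zero 4KB block costs exactly 4KB once (deduplicated).
    `volume` maps LBA -> current 4KB contents; metadata overhead is ignored."""
    unique_nonzero = {data for data in volume.values() if data != ZERO_BLOCK}
    return BLOCK_SIZE * len(unique_nonzero)


def logical_bytes(volume: dict) -> int:
    # Size the host sees, whatever the contents.
    return BLOCK_SIZE * len(volume)


# A freshly eager-zeroed 4MiB toy disk: every LBA holds zeros.
disk = {lba: ZERO_BLOCK for lba in range(1024)}
print(logical_bytes(disk), physical_bytes_used(disk))  # 4194304 0

disk[0] = b"\x01" * BLOCK_SIZE
disk[1] = b"\x01" * BLOCK_SIZE  # duplicate content: deduplicated
disk[2] = b"\x02" * BLOCK_SIZE
print(physical_bytes_used(disk))  # 8192
```

The logical size stays at 4MiB throughout; only the two distinct non-zero blocks consume physical space.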

And there’s more magic in XtremIO’s support for the VAAI unmap (space reclamation) verb. When the ESX host issues an unmap command, the specific LBA-to-fingerprint mapping is erased from the metadata, and the reference count of the underlying block corresponding to that fingerprint is decremented. A subsequent read of that unmapped LBA returns a zero block, because the entry no longer exists in the mapping metadata; this holds whether or not other LBAs still reference the same block. Once the reference count reaches zero, the block is no longer needed, but there is no need to erase it on SSD immediately, which avoids the expensive flash erase overhead we talked about earlier. Again, these are all metadata operations that do not involve the SSD at all.
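The unmap flow, including the point that a read of an unmapped LBA returns zeros regardless of the block’s remaining reference count, can be sketched like this. `Volume`, its fields, and the `reclaimable` set (standing in for background reclamation) are hypothetical; SHA-256 is an assumed fingerprint, and the zero check uses a direct comparison for brevity.

```python
import hashlib

BLOCK_SIZE = 4096
ZERO_BLOCK = bytes(BLOCK_SIZE)


class Volume:
    """Hypothetical metadata-only unmap model (SHA-256 as assumed fingerprint)."""

    def __init__(self):
        self.lba_to_fp = {}       # LBA -> fingerprint
        self.refcount = {}        # fingerprint -> number of LBAs referencing it
        self.blocks = {}          # fingerprint -> stored data ("flash")
        self.reclaimable = set()  # unreferenced blocks, left for background reclamation

    def write(self, lba: int, data: bytes) -> None:
        self.unmap(lba)  # drop any previous mapping first
        if data == ZERO_BLOCK:
            return  # zero blocks are never recorded
        fp = hashlib.sha256(data).hexdigest()
        self.blocks.setdefault(fp, data)
        self.reclaimable.discard(fp)  # referenced again, so not reclaimable
        self.refcount[fp] = self.refcount.get(fp, 0) + 1
        self.lba_to_fp[lba] = fp

    def unmap(self, lba: int) -> None:
        fp = self.lba_to_fp.pop(lba, None)  # erase the LBA-to-fingerprint entry
        if fp is None:
            return
        self.refcount[fp] -= 1
        if self.refcount[fp] == 0:
            del self.refcount[fp]
            # No immediate flash erase; the block just becomes reclaimable.
            self.reclaimable.add(fp)

    def read(self, lba: int) -> bytes:
        fp = self.lba_to_fp.get(lba)
        # No mapping means zeros, whatever the block's remaining refcount.
        return ZERO_BLOCK if fp is None else self.blocks[fp]


vol = Volume()
shared = b"\x05" * BLOCK_SIZE
vol.write(1, shared)
vol.write(2, shared)  # both LBAs reference one stored block
vol.unmap(1)
print(vol.read(1) == ZERO_BLOCK, len(vol.reclaimable))  # True 0
vol.unmap(2)
print(len(vol.reclaimable))  # 1
```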

What does it mean for you?

All this special handling of zero blocks in the XtremIO array means much faster performance and much better flash endurance. When EMC quotes performance numbers for XtremIO arrays, we use what we call “functional IOPS”: we completely precondition the entire capacity of the array, run the arrays very full, test with unique data (the worst case for performance), and use completely random patterns over large LBA ranges. We also make sure our test patterns contain no zero blocks that could juice our performance claims. In fact, we had to build our own test tools to do this, since some common tools only send blocks of zeros.

The bottom line is that in real-world production settings our customers typically exceed our performance claims, because real data streams contain some percentage of zero blocks (and because we specify our “functional IOPS” performance very conservatively). If you want to see this in action, simply use VMware to clone a typical VM on XtremIO. You’ll see amazingly fast cloning performance because of our in-memory metadata architecture, and even faster performance (10GB/s per X-Brick is not uncommon) when the cloning operation reaches the empty zero space toward the end of the VM.
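A test-pattern generator in the spirit of the “functional IOPS” methodology has to guarantee unique, non-zero blocks written over random LBAs. The sketch below is hypothetical and not EMC’s tool: an 8-byte big-endian counter starting at 1 makes every block in a run distinct and never all zeros, the rest of each block is random bytes from `os.urandom`, and `random.Random(seed).shuffle` produces a reproducible random LBA order.

```python
import os
import random
import struct

BLOCK_SIZE = 4096
ZERO_BLOCK = bytes(BLOCK_SIZE)


def unique_nonzero_blocks(count: int):
    """Yield `count` distinct 4KB blocks, none of them all zeros.

    An 8-byte big-endian counter starting at 1 makes every block in the run
    distinct and guarantees a non-zero byte; the rest is random data."""
    for i in range(1, count + 1):
        yield struct.pack(">Q", i) + os.urandom(BLOCK_SIZE - 8)


def random_lba_order(num_lbas: int, seed: int = 0) -> list:
    """Visit every LBA in the range exactly once, in a reproducible random order."""
    lbas = list(range(num_lbas))
    random.Random(seed).shuffle(lbas)
    return lbas


# Pair each unique block with a random LBA across the test range.
workload = list(zip(random_lba_order(1000), unique_nonzero_blocks(1000)))
```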

Saved by zero… Xpect More!