Walter Isaacson:

The most important thing that Evangeline Adams would want you to know about her was that she was an Aquarius. In the late 19th and early 20th centuries, she was by far the most famous fortune-teller in America, and her client list included some of the richest and most powerful people in the country. Adams was born at 8:36 AM on February 6th, 1868, when Saturn was in the ninth house. She began studying astrology in Boston at age 19 and a few years later moved to New York, hoping to earn a living predicting the future.

But at the time, it was illegal to practice astrology in New York City. Along with tea-leaf reading and palmistry, astrology was considered a black art. It was not something that respectable Americans paid attention to, so finding a hotel where she could live and work was a challenge. Finally, Warren Leland, the proprietor of the Windsor Hotel in Manhattan, took Adams in and even agreed to let her read his horoscope. What she saw gave her cause for worry. She predicted a terrible calamity on the horizon, but Leland chose to ignore her warning, and the next day, the Windsor Hotel was destroyed by an intense fire.

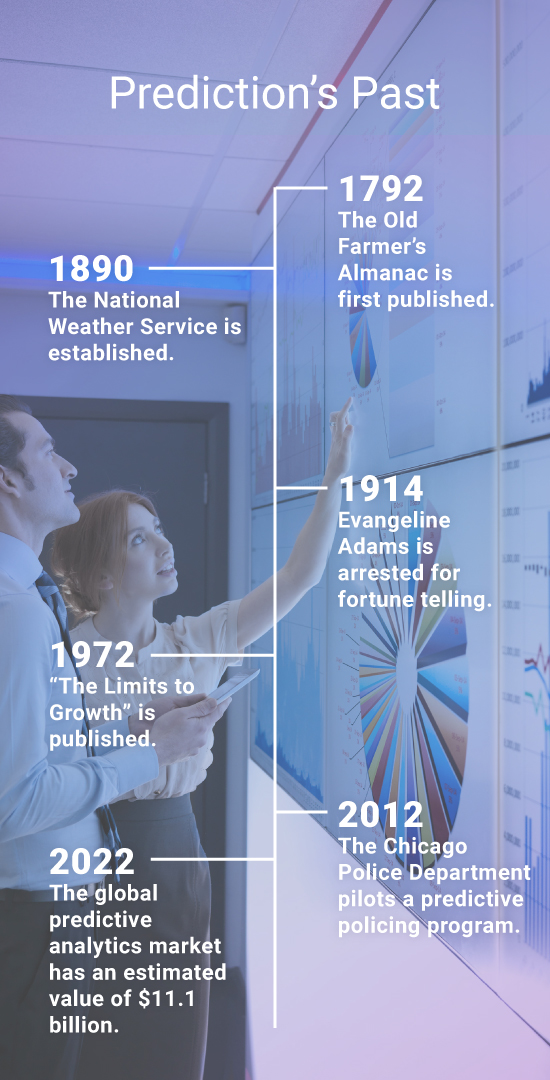

The newspapers seized on the story, and Adams became an instant celebrity. But the New York police were less than impressed with Adams’ clairvoyant powers. In 1914, she was arrested for her fortune-telling. But Adams saw this unfortunate turn of events as an opportunity. At the trial, she asked the presiding judge for the exact date and time of his son’s birth. She was able to provide such an accurate description of the young boy that the judge acquitted her, saying that she had raised astrology to “the dignity of an exact science.”

Now able to practice legally, Evangeline Adams rented space on the 10th floor of Carnegie Hall. Her fame and fortune grew. Among the people who came by her office were King Edward the Seventh of Britain, actors Charlie Chaplin and Mary Pickford, US senators, and Wall Street tycoons like the banker JP Morgan. She became known as the Seer of Wall Street. “Millionaires don’t need astrologers,” JP Morgan once said, “but billionaires do.” By 1930, she was receiving 4,000 letters a week and had a nationally syndicated primetime radio program. Two years later, she died, and apparently she had predicted that too.

Evangeline Adams had many skeptics who insisted her predictions were not nearly as accurate as she claimed, but that didn’t diminish her popularity. Wanting to know the future is a very human instinct shared by the poor and billionaires alike. That’s especially true when times are turbulent and the present can seem incomprehensible. Today, the prediction business has never been hotter, thanks largely to advances in artificial intelligence that allow data scientists to make predictions about everything from crime and disease to what movie we’ll want to stream next, and Evangeline Adams probably could have predicted all of it.

I’m Walter Isaacson and you’re listening to Trailblazers, an original podcast from Dell Technologies.

Speaker 2:

Who are the people who are most likely to succeed?

Speaker 3:

Obviously, prediction is not an exact science.

Speaker 4:

Now I’m going to tell you something that happened to you when you were six years old.

Speaker 5:

You are about to take a journey out of this world into the world of the future. You are entering Futurama.

Walter Isaacson:

Astrology was not the only kind of prediction that Americans turned to in the late 19th century. The stars might help them predict their personal fortunes, but that wasn’t enough. Farmers demanded accurate weather predictions and crop forecasts, and manufacturers needed to be able to predict market demand.

Jamie Pietruska:

One of the things that is most striking to me about this period is the way in which predictions became part of daily life.

Walter Isaacson:

Jamie Pietruska is the author of Looking Forward: Prediction and Uncertainty in Modern America. In the 19th century, predictive tools were rudimentary and the results were hard to trust, and that contributed to what Pietruska calls a crisis of uncertainty.

Jamie Pietruska:

There were new cultures of professional expertise around those forecasts, and there was also unprecedented public demand for forecasts at the same time that there was also a lot of skepticism. So forecasting in this period became more commonplace, it became more complex, but it also became controversial. Controversial because there was this tension between the expectation that you could know the future with some degree of certainty and the reality that forecasts are not always accurate.

Walter Isaacson:

In the 19th century, government agencies such as the National Weather Service and the US Department of Agriculture promoted predictions based on science, but many Americans remained unconvinced. The weather service, which began in 1890, had to compete with the continuing appeal of forecasts from the Farmer’s Almanac, first published in 1792 and still a staple of supermarket checkout counters today. But by the first decades of the 20th century, attitudes toward predictions and certainty were beginning to change.

Jamie Pietruska:

It is a shift away from this idea that knowledge is fixed and toward an idea in which chance and uncertainty are really inescapable. Uncertainty became understood as a feature rather than a bug, a feature of prediction rather than a failure. So we see in weather forecasting, in agricultural forecasting, and in the judicial system that had to deal with the policing and prosecution of fortune-tellers, this gradual shift toward accommodating more chance and uncertainty in a way that the forecasters of the late 19th century had not done.

Walter Isaacson:

By the late 1920s, people’s interest in predicting the future started to intensify. That was largely because technological achievements were changing daily life at such a rapid pace that, for the first time in human history, it was clear that the not-too-distant future would look quite different from the recent past. Most predictions fell flat, but some were surprisingly accurate. Scientist J.B.S. Haldane wrote a book called Possible Worlds in which he predicted wind farms and hormone replacement therapy. Famed science fiction writer H.G. Wells foresaw space travel, nuclear weapons, and something resembling the World Wide Web. But before the age of computers, these predictions, even those that hit the mark, were still only the imagined futures of visionary thinkers. There was no data or number crunching to back them up.

By the 1960s, concerns about the future of the planet sparked demand for more reliable predictive models, and luckily, even the rudimentary computer technology of the day put such models within the realm of possibility. For the first time, people began to see the planet as a giant interconnected ecosystem. Damage to one part of the system would have consequences elsewhere.

Bill Behrens:

It was not a very well-developed field, but when I looked at all of the possible areas of study, the environmental area, and particularly the nexus of environmental and economic and social issues was extremely interesting to me personally.

Walter Isaacson:

This is Bill Behrens. In the late 1960s, while studying at MIT, Behrens met a pioneering computer engineer named Jay Forrester. At the time, scientists lacked tools to predict exactly what the consequences of environmental degradation might be, but that changed in 1972 with the publication of The Limits to Growth. Written by four MIT researchers, including Behrens, the book was commissioned by a group of European industrialists called the Club of Rome. It drew heavily on Forrester’s work, which developed a new method for making decisions when faced with complex dynamic systems. It was called system dynamics modeling, and it was based on the idea that everything is connected and nothing exists in isolation.

Forrester originally designed the model to help company managers understand the long-term impact of their decisions, but he soon realized it could be applied to larger systems, including the large dynamic system known as Planet Earth. In 1970, Jay Forrester was invited to meet with the Club of Rome.

Bill Behrens:

He gets challenged to think about the interactions among the big picture items, the population, the capital growth, those kinds of things on a global basis. He has a very interesting meeting over there and on his flight back, he literally takes out an envelope and a pencil and starts sketching the relationships between these sort of large global descriptors of our economic and social system.

Walter Isaacson:

Forrester’s original model indicated that if the interaction between these big picture items didn’t change, there would be drastic consequences in the not so distant future.

Bill Behrens:

His original model showed a behavior mode that in about 2050 would result in significant declines in global population, food stability, environmental safety, capital accumulation. In other words, it showed a behavior mode where the world really went to hell about 70, 80 years into the future. What he did is he put that up on screen at the Club of Rome and said, “We have to figure out whether this is real or not. This is so compelling that we need to understand what’s going on here.”

Walter Isaacson:

Behrens and his co-authors used Forrester’s model to produce 12 computer-based scenarios showing how resource depletion, population growth, industrial output, food production, and pollution would influence each other under various policies that governments might adopt. In the first scenario, a failure to do anything could lead to societal collapse in the first half of the 21st century. Both politicians and economists thought the book’s dire predictions were way off base, but Behrens believes this is because critics didn’t understand one important difference: the difference between predicting and modeling.

Bill Behrens:

I think that’s a really important distinction. Predicting is a game that people play when they actually think that they can understand how the world works. Modeling is an attempt to put down on paper how we think the world works and how we think it will develop over time. But whenever people would say, “Well, how can you make this prediction of the future?”, our response would always be, this is not a prediction. This is an explanation of how decisions are being made right now. If decisions continue to be made the same way in the future, it is likely that the scenario will follow the pathway that we’re showing. That’s the point of making the model. The point is not to say what’s going to happen 75 years in the future. The point is to bring it back to the present and say, “How do our decisions now affect what is likely to happen in the future?”
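
To make Behrens’s distinction between modeling and predicting concrete, here is a deliberately tiny system dynamics model in Python. It is not World3, the model behind The Limits to Growth: the two stocks (population and a non-renewable resource), the feedback rule, and every parameter value are hypothetical, chosen only to show how one model produces different trajectories under different policy choices rather than a single forecast.

```python
from dataclasses import dataclass


@dataclass
class Scenario:
    name: str
    use_per_person: float  # hypothetical policy lever: resource units used per person per year


def simulate(scenario: Scenario, years: int = 150) -> list[tuple[float, float]]:
    """Step two coupled stocks forward one year at a time (simple Euler integration).

    Returns a (population, resource) pair for the end of each year.
    All starting values and rates are illustrative, not calibrated to any data.
    """
    population = 1.0   # arbitrary population units
    resource = 1000.0  # arbitrary units of a non-renewable resource
    history = []
    for _ in range(years):
        # Feedback loop: as the resource is depleted, net growth falls and
        # eventually turns negative (-1% per year once it is gone).
        fraction_left = resource / 1000.0
        growth_rate = 0.03 * fraction_left - 0.01
        # Consumption scales with population but cannot exceed what is left.
        usage = min(resource, scenario.use_per_person * population)
        population += growth_rate * population
        resource -= usage
        history.append((population, resource))
    return history


for scenario in (Scenario("do nothing", 10.0), Scenario("conservation", 1.0)):
    history = simulate(scenario)
    peak = max(p for p, _ in history)
    final_population, final_resource = history[-1]
    print(f"{scenario.name}: peak population {peak:.2f}, "
          f"final population {final_population:.2f}, resource left {final_resource:.1f}")
```

Running `simulate` once per scenario and comparing the histories is the modeling move Behrens describes: the question is not what the final numbers will be, but how a choice made today (here, the hypothetical `use_per_person` lever) changes the shape of the whole path.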

Walter Isaacson:

The Limits to Growth sold 12 million copies and was translated into 37 languages. It remains controversial, but it’s the bestselling environmental book of all time. More than 50 years after its publication, Behrens says that some of the projections made in the book’s do-nothing scenario have proven to be correct. That’s not great news for the planet, but it does speak to the resilience of the system dynamics model they developed. That’s no small accomplishment given the limited computing capacity and the shortage of data the authors had to work with. Today, neither of those limitations applies. We are experiencing an explosion in our predictive capacity and in our confidence that we can get those predictions right.

Eric Siegel:

I’m Eric Siegel, and I’m the author of Predictive Analytics: The Power to Predict Who Will Click, Buy, Lie, or Die.

Walter Isaacson:

Eric Siegel’s enthusiasm for predictions began at a young age. A family friend introduced him to artificial intelligence, which led him to study machine learning in graduate school. Machine learning basically looks for patterns inside large sets of data and uses those patterns to make predictions about the future.

Eric Siegel:

I mean, prediction as a capability is the holy grail for driving all sorts of large scale operations. That is to say many, many micro-decisions on a case by case basis. If you can predict what the outcome or behavior’s going to be, then you can optimize what to do about it.

Walter Isaacson:

Siegel left academia in 2003, and since then he’s been writing books, lecturing, and advising a wide range of clients on the game-changing potential of machine learning, or what he calls predictive analytics. What distinguishes predictive analytics from the kind of forecasting that companies have been doing for decades is the ability to drill down and predict behavior on an individual level.

Eric Siegel:

When people use the word forecasting, they’re talking about, “Hey, over this whole population, how many ice cream cones are we going to sell? Are more people going to vote Democratic than Republican?” Whatever the overall prediction across a large number. Whereas predictive analytics, since it’s predicting for each individual, it’s which individual is most likely to buy an ice cream cone? Which individual is most likely to vote for candidate A rather than candidate B? It’s on that per individual level, and that’s very actionable, right? That’s valuable for operations because each prediction directly informs the action, the treatment taken for that individual.
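
Siegel’s distinction between forecasting and predictive analytics can be sketched in a few lines of Python. The names and probabilities below are made up; in practice the per-person scores would come from a trained model. Summing the scores gives one aggregate forecast for the population, while ranking them tells you which individuals to act on.

```python
# Hypothetical per-person probabilities of buying an ice cream cone,
# standing in for the scores a trained model would produce.
scores = {"ana": 0.82, "ben": 0.10, "chen": 0.47, "dee": 0.05, "eli": 0.66}


def forecast_total(probs: dict[str, float]) -> float:
    """Forecasting: one number for the whole population (expected buyers)."""
    return sum(probs.values())


def top_prospects(probs: dict[str, float], k: int) -> list[str]:
    """Predictive analytics: rank individuals so each score can drive an action."""
    return sorted(probs, key=probs.get, reverse=True)[:k]


print(round(forecast_total(scores), 2))  # 2.1
print(top_prospects(scores, 2))          # ['ana', 'eli']
```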

Walter Isaacson:

One area where the predictive power of machine learning has proven to be particularly useful is advertising.

Aaron Andalman:

There’s a rule in advertising, it’s called the Wanamaker rule, but it’s sort of the idea that for every $2 spent in advertising, one is wasted, but you just don’t know which.

Walter Isaacson:

Aaron Andalman doesn’t have a lot in common with John Wanamaker. Andalman is a neuroscientist and a specialist in artificial intelligence. Wanamaker was a pioneering merchant who in the 1870s opened one of America’s first department stores in Philadelphia. What the two men share is a quest to unlock the secret of advertising: to predict consumer behavior so effectively that every dollar spent on advertising will be a dollar well spent. Wanamaker relied mostly on gut instinct to predict how consumers might respond to his advertising. Andalman, who is the chief science officer and co-founder of a company called Cognitiv, relies on large artificial neural networks.

Aaron Andalman:

One thing that artificial neural networks are capable of is understanding human content in a way that no prior machine learning or computer technology could. Really one of the powers of this technology is its ability to understand what’s in an image or understand what a paragraph of text is about in a much richer and more profound way than earlier machine learning technologies. That has implications in lots of industries, but in advertising, that seems particularly potent because if I can understand what a particular webpage is about, I can get a deeper insight into what a user’s interests are or where a user is in their search for a potential purchase. By having that deeper understanding, our thesis was that’s going to allow more accurate predictions about where ads will be effective.

Walter Isaacson:

Advertising today bears little resemblance to the martini-soaked industry depicted in the TV series Mad Men. These days, computers make decisions about where and when to place an online ad and how much that ad is worth, and they do it all in a fraction of a second. Marketers have access to enormous amounts of data about the people viewing their ads, but figuring out how to use that data to reach more potential customers has been a challenge. That’s what Cognitiv’s customized deep learning algorithms are designed to do.

Aaron Andalman:

We try to build causal models, and by that I mean for every ad opportunity we try to predict how likely the user who would see that ad is to perform some action if we don’t show them the ad, and also if we do show them the ad, and then we try to use the difference between those predictions to make decisions. Fundamentally, we’re trying to make two predictions. What will happen if we don’t show the ad? What will happen if we do? If there’s a difference between those predictions, then that’s a potentially valuable impression for our client or our partner.
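
The two-prediction approach Andalman describes resembles what practitioners often call uplift modeling. The Python sketch below assumes two separately trained models and replaces them with hypothetical logistic formulas; the feature names (`recent_visits`, `page_relevance`), the coefficients, and the bidding threshold are illustrative assumptions, not Cognitiv’s actual system.

```python
import math


def logistic(x: float) -> float:
    return 1.0 / (1.0 + math.exp(-x))


# Hypothetical stand-ins for two separately trained models: one estimates the
# chance a user converts if no ad is shown, the other if the ad is shown.
def p_convert_without_ad(user: dict[str, float]) -> float:
    return logistic(-3.0 + 0.15 * user["recent_visits"])


def p_convert_with_ad(user: dict[str, float]) -> float:
    return logistic(-3.0 + 0.15 * user["recent_visits"] + 0.8 * user["page_relevance"])


def uplift(user: dict[str, float]) -> float:
    """Estimated effect of the ad: the difference between the two predictions."""
    return p_convert_with_ad(user) - p_convert_without_ad(user)


def should_bid(user: dict[str, float], threshold: float = 0.01) -> bool:
    """Bid only where the ad is predicted to change behavior by a meaningful margin."""
    return uplift(user) > threshold


persuadable = {"recent_visits": 2, "page_relevance": 1.0}
irrelevant_page = {"recent_visits": 2, "page_relevance": 0.0}
sure_thing = {"recent_visits": 60, "page_relevance": 1.0}  # likely to convert anyway

for label, user in [("persuadable", persuadable),
                    ("irrelevant page", irrelevant_page),
                    ("sure thing", sure_thing)]:
    print(label, round(uplift(user), 3), should_bid(user))
```

The third case shows why the difference matters: a user who would convert anyway scores high on both models but yields almost no uplift, so the impression is worth little even though the user is very likely to buy.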

Walter Isaacson:

Despite the sophistication of these algorithms, consumers remain unpredictable, and it’s still not possible to know whether every ad dollar will have its intended effect. While solving the Wanamaker riddle remains a work in progress, there’s another, more profound riddle that has kept people up at night for all of human history: When will I die? Today, we can feed a computer images of millions of skin lesions, and chances are it’ll do a better job of predicting which ones might be cancerous than even the most experienced dermatologist. But now a team of American researchers is taking the idea of predictive medicine into uncharted territory. Early detection of disease is one thing. Early detection that someone might be contemplating suicide is something entirely different.

Ben Reis:

With suicide, we have the opportunity to really save lives with computers.

Walter Isaacson:

Ben Reis is director of the Predictive Medicine Group at Harvard Medical School. Reis and his colleagues were looking for ways to harness the predictive potential of the vast amount of data that can be extracted from patients’ medical records. They wanted to make predictions that would be actionable, about outcomes that could be prevented, and they found, much to their surprise, that they could do that with suicide.

Ben Reis:

Once we started running our models on these large medical data sets, we found that the computer was able to identify between a third and a half of all suicide attempts on average three years before they took place. Now, this was very surprising, including to some of the suicide researchers that we work with who are specialists in the field.

Walter Isaacson:

Reis says that many people contemplating suicide fall through the cracks because doctors don’t always know what to look for. A patient might come in complaining of stomach pains, and the doctor will treat the pain without realizing that thousands of factors end up being predictive of suicidal outcomes, and stomach pain happens to be one of them.

Ben Reis:

We noticed that in some situations there were certain gastrointestinal syndromes, so stomach pains, that were associated with suicidal risk. Now, that doesn’t necessarily sound like a logical connection, but we all know that stomach pains can be related to stress. Even though it’s not necessarily causative, it may not even be correlated, but it’s kind of one of the first things that might be noticeable if you’re looking at it from this different perspective, which is the clinical record. But the bottom line is that this is very much a gestalt kind of approach where the computer adds up thousands of different facts about each person, and if they all add up to a high risk signal, then it alerts the clinician to take another look.
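
The “gestalt” Reis describes, where many weak signals add up to one risk score, can be sketched as a simple additive (logistic) score in Python. This is an illustration of the general idea, not the Harvard group’s model: the placeholder codes, weights, bias, and alert threshold are entirely hypothetical and carry no clinical meaning. The point is only that no single code crosses the threshold while a combination can, and that the output prompts a clinician rather than making a diagnosis.

```python
import math

# Entirely hypothetical placeholder codes and weights with no clinical meaning.
# A real system would learn thousands of weights from historical records.
WEIGHTS = {"code_A": 0.4, "code_B": 0.25, "code_C": 0.9, "code_D": -0.2}
BIAS = -4.0


def risk_score(record_codes: set[str]) -> float:
    """Add up the weight of every code in a record and squash the total into (0, 1)."""
    z = BIAS + sum(WEIGHTS.get(code, 0.0) for code in record_codes)  # unknown codes count as 0
    return 1.0 / (1.0 + math.exp(-z))


def needs_second_look(record_codes: set[str], threshold: float = 0.05) -> bool:
    """Flag the record for a clinician's attention; this is a prompt, not a diagnosis."""
    return risk_score(record_codes) >= threshold


print(needs_second_look({"code_C"}))                      # False: one signal alone
print(needs_second_look({"code_A", "code_B", "code_C"}))  # True: signals add up
```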

Walter Isaacson:

Reis says his team has also developed markers to help doctors identify child abuse long before any physical evidence of abuse might be visible. He claims their models can identify more than half of all cases of abuse an average of two years before the medical system could identify them. The model uses anonymized data, so a doctor won’t know whether the patient in front of them might be contemplating suicide or suffering from abuse, but they will know what factors to look for.

Ben Reis:

This computer can serve as kind of an assistant to the doctor basically saying, “Hey, doc, this person coming in for this next appointment right now, statistically speaking may fall into a very high risk category. So take an extra couple of minutes and talk to this patient. Try to see if, using your clinical skills, there might be some risk there. Maybe call in a consult, a social worker, a psychologist”, et cetera. But the idea is that using this screening support system, we could prevent more people from falling through the cracks and hopefully identify people who are in need much earlier than we do now, and in many cases identify them before it’s too late.

Walter Isaacson:

If it sometimes feels like artificial intelligence is being used to predict everything these days, you aren’t wrong. We’re not only using AI to predict consumer behavior and the likelihood of developing different diseases and mental health issues. We’re also using it to predict where and when serious crimes might happen in your city. Efforts to use AI as a crime prediction tool have been ongoing for many years, but these algorithms have been controversial, plagued by charges that so-called predictive policing unfairly targets some groups and neighborhoods.

Ishanu Chattopadhyay:

You cannot reduce the complexity of the social interaction between humans, law enforcement, social factors, all of that to one equation. That’s a terrible idea.

Walter Isaacson:

Ishanu Chattopadhyay is a professor of data science at the University of Chicago. He studies the use of predictive policing algorithms.

Ishanu Chattopadhyay:

People try to just take all the data and come up with some features, saying that maybe this is one of the characteristics that is driving crime. For example, maybe the amount of graffiti on the walls is something that predicts crime. Let’s kind of include that as a feature in our machine learning system. The issue with that is you are handpicking the features, so even if you think that I have picked every possible thing and have not incorporated any bias, you can’t be sure about that.

Walter Isaacson:

To combat this problem, Chattopadhyay and a team of data and social scientists have developed a new predictive algorithm that doesn’t rely on handpicked human inputs, which can often skew the results. Instead, it divides the city into spatial tiles and looks at public data on crime in those areas.

Ishanu Chattopadhyay:

It’s like you kind of put a spatial grid on the city. You break it up into tiles of a couple of city blocks, just kind of put a grid on it, and then you sit down on any of those tiles and kind of see what events are happening there. Then you see how many crimes of different types are happening each day, or maybe nothing happened. If you’re looking at violent crimes, then you get a time series for violent crimes in that particular tile. If you’re looking at property crimes, you’ll get a time series for that tile, and you do that for the entire grid. You’ll have thousands of different time series that you can observe. Then what the algorithm really does is figure out how the different time series interact with one another and constrain one another, and once you get those models out, you can kind of combine them to make future predictions.
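
The first step Chattopadhyay describes, turning a city’s event log into one time series per tile, might look like the Python sketch below. The tile size in degrees, the event tuple layout, and the crime-type labels are assumptions for illustration; the harder part of the method, learning how the series constrain one another, is not shown. This sketch also only creates series for tiles that recorded at least one event of the chosen type, whereas a full pipeline would enumerate every tile in the grid.

```python
import math
from collections import defaultdict
from datetime import date

# Hypothetical tile edge in degrees of latitude/longitude; the researchers'
# actual grid size is not given in the episode.
TILE_SIZE_DEG = 0.003

Event = tuple[float, float, date, str]  # (latitude, longitude, day, crime type)


def tile_of(lat: float, lon: float, size: float = TILE_SIZE_DEG) -> tuple[int, int]:
    """Map a coordinate to the (row, column) index of its grid tile."""
    return (math.floor(lat / size), math.floor(lon / size))


def build_series(events: list[Event], start: date, days: int,
                 crime_type: str) -> dict[tuple[int, int], list[int]]:
    """Count events of one type per tile per day, giving one daily time series per tile."""
    series = defaultdict(lambda: [0] * days)  # quiet days stay at 0
    for lat, lon, day, kind in events:
        if kind != crime_type:
            continue
        offset = (day - start).days
        if 0 <= offset < days:  # ignore events outside the observation window
            series[tile_of(lat, lon)][offset] += 1
    return dict(series)
```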

Walter Isaacson:

Chattopadhyay and his colleagues have applied their model to Chicago and several other American cities. They say it was able to predict future crimes one week in advance with about 90% accuracy. That can be incredibly useful for residents and law enforcement, but Chattopadhyay insists that prediction is not the end goal of their research. He’s more interested in using his model to influence policy decisions.

Ishanu Chattopadhyay:

Really what I would like to see is this tool to be used as a demonstration that now with all the data and the AI that we can have, policy makers don’t have to make policies based on what they feel in their guts. We can actually optimize policies based on data, based on prediction, so a more scientific approach to governance.

Eric Siegel:

Yes, we can predict from data, but no, we cannot predict like a crystal ball. That’s not credible. We don’t have clairvoyant capabilities as humans nor as machines.

Walter Isaacson:

Eric Siegel literally wrote the book on predictive analytics. It’s been the main focus of his work for more than 20 years, which is why it’s interesting that these days, what he’d like us all to do is slow down, take a deep breath, and get realistic about what predictive algorithms can and can’t do. No matter how many millions of data points you feed into artificial neural networks, the only thing you can really predict with certainty when it comes to human behavior is unpredictability. Or, as Nobel Prize-winning physicist Niels Bohr liked to say, “Prediction is very difficult, especially if it’s about the future.”

I’m Walter Isaacson and you’ve been listening to Trailblazers, an original podcast from Dell Technologies, who believe there’s an innovator in all of us. If you want to learn more about the guests in today’s episode, please visit delltechnologies.com/trailblazers. Thanks for listening.

In the late nineteenth and early twentieth centuries, Evangeline Adams was the most famous fortune teller in America. She was known as the “seer of Wall Street” and had her own syndicated radio program. But Adams wasn’t the only one predicting the future in nineteenth-century America. Government agencies such as the National Weather Service and the U.S. Department of Agriculture made predictions based on science. But it wasn’t until the advent of computer technology that our ability to make data-driven predictions advanced. Today, instead of relying on the stars, researchers use predictive models and deep learning algorithms to see what outcomes could occur in different scenarios. Hear what’s in the cards for prediction on this episode of Trailblazers.

Jamie Pietruska is an Associate Professor of History at Rutgers University-New Brunswick, where she specializes in US cultural history and the history of science and technology. She is the author of "Looking Forward: Prediction and Uncertainty in Modern America" and related essays on histories of forecasting and information.

Eric Siegel is the Bodily Bicentennial Professor in Analytics at UVA Darden School of Business, a leading consultant, and the founder of the long-running Machine Learning Week conference series. Eric authored the bestselling "Predictive Analytics: The Power to Predict Who Will Click, Buy, Lie, or Die", which has been used in courses at hundreds of universities.

Aaron Andalman is the Chief Science Officer and Co-founder of Cognitiv. At Cognitiv, his team of scientists develops custom algorithms for clients based on the latest advances in deep learning and machine intelligence.

Ben Reis is Director of the Predictive Medicine Group at Harvard Medical School. He explores the fundamental patterns of human disease, and harnesses artificial intelligence to predict diseases years in advance.

Ishanu Chattopadhyay is an Assistant Professor of Medicine at the University of Chicago. He’s an expert in artificial intelligence, machine learning, and the computational aspects of data science. Chattopadhyay and his team have developed AI that is able to predict future crimes one week in advance with about 90% accuracy.