Gen-Z, an open-systems interconnect designed to provide memory-semantic access to data and devices via direct-attached, switched, or fabric topologies, is quickly becoming the industry standard. In October 2016, twelve industry leaders came together to lead the charge in creating and commercializing this new data access technology. The Gen-Z Consortium consists of like-minded members dedicated to developing an open ecosystem that will benefit the entire industry.

In less than two years, the Gen-Z Consortium has already accomplished a great deal. It has:

- Published the Gen-Z specification, the product of 50+ companies seeing the value in the vision and working to put it into practice

- Converged on a high-speed connector and had it adopted by other standards bodies

- Adopted pluggable modules that will allow easy expansion and configuration of high-speed resources

- Created demos to show how Gen-Z can be used to create a fully composable solution

- Created design and validation IP

- Started the design of dedicated Gen-Z chips

- Started the design of composable systems using the Gen-Z fabric

By working together, the Gen-Z Consortium can examine the future needs of the IT industry and create positive change for all. Now that the fields are plowed and planted, it’s time to consider when and how Gen-Z will impact you.

First off, why do you need Gen-Z?

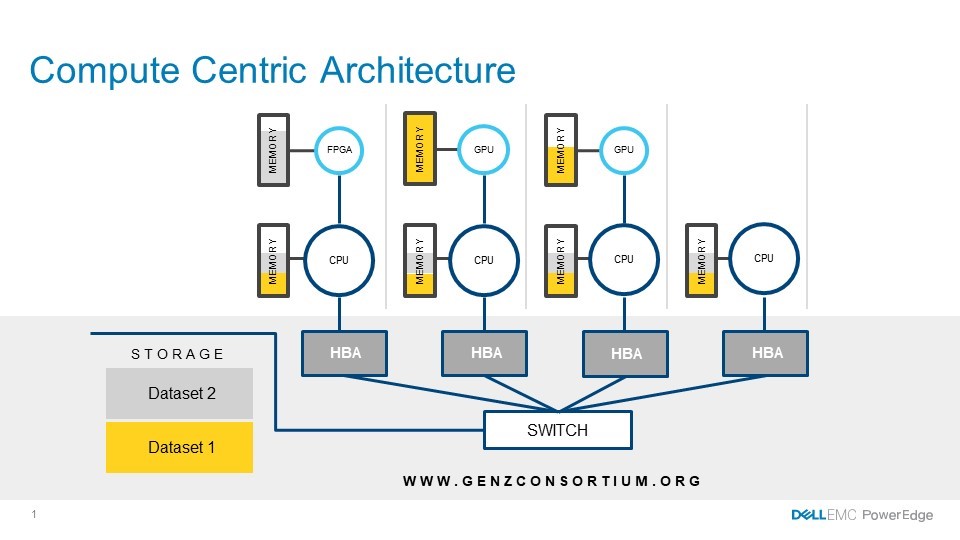

The message hasn’t changed since the Consortium launched: it’s all about the data! If you consider today’s CPU-centric architecture (figure 1), you notice that data gets copied every time a new actor wants to transform it. For instance, the CPU copies data from storage to its memory (or uses RDMA to do the copy), then the data is copied again when a GPU or FPGA wants to work on it, and it is copied back to the CPU memory once this work is complete. That’s a lot of time and energy spent on copying and moving data, none of which gets the business any closer to the answers and insights it wants.

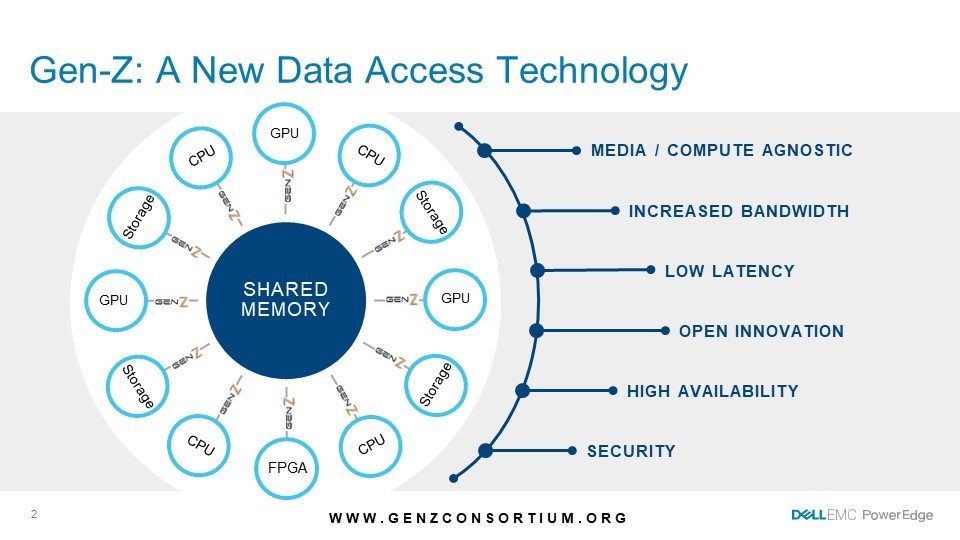

Now consider figure 2. Here you see a pool of memory that is shared by all of the resources, giving every compute engine equal rights to the data. This lets the application use the best technology for the task at hand without copying the data. That reduces the overall time required to get the answers your business needs, deal with a cybersecurity threat, or execute a financial transaction.
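To make the difference concrete, here is a minimal sketch that counts the bytes moved under each model. The function names are hypothetical, and the model rests on two assumptions that go beyond the text: every copy moves the full dataset, and in the shared-pool case the data is placed in the pool once and then accessed in place.

```python
def cpu_centric_bytes_moved(dataset_bytes: int, accelerator_passes: int = 1) -> int:
    """Bytes copied in the CPU-centric flow of figure 1.

    Assumes one copy from storage into CPU memory, then for each
    accelerator pass one copy out to the GPU/FPGA and one copy back.
    """
    load = dataset_bytes                                  # storage -> CPU memory
    round_trips = 2 * dataset_bytes * accelerator_passes  # CPU <-> accelerator
    return load + round_trips


def shared_pool_bytes_moved(dataset_bytes: int, accelerator_passes: int = 1) -> int:
    """Bytes copied in the shared-pool flow of figure 2.

    Assumes the data lands in the pool once; every compute engine then
    works on it in place, so extra passes add no copies.
    """
    return dataset_bytes


if __name__ == "__main__":
    one_tb = 10**12
    for passes in (1, 2, 4):
        print(passes,
              cpu_centric_bytes_moved(one_tb, passes),
              shared_pool_bytes_moved(one_tb, passes))
```

Under these assumptions the CPU-centric cost grows with every engine that touches the data, while the shared-pool cost stays flat.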

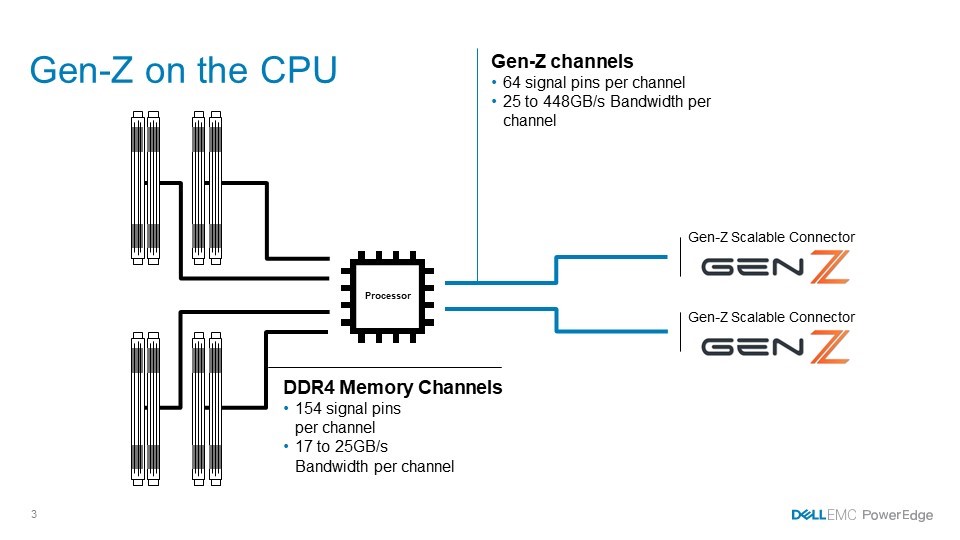

Let’s consider the CPU for a moment. Processor vendors continue to add cores to their designs at a good clip, but they haven’t been able to add memory bandwidth at the same rate, and the memory bandwidth per core is now half what it was in 2012. The reason for this dramatic drop is the number of pins required to add a new memory channel (it takes a new memory channel to add more bandwidth at a given frequency). For DDR4, the CPU vendor needs to add 154 signal pins for each new channel, and balanced designs require adding 2 channels at a time, a large burden for devices that are already near 4000 pins.

Looking at figure 3, you can see that Gen-Z can add more memory bandwidth for fewer pins, and these pins can be shared with the PCIe pins, so it does not increase the pin count at all. This gives the customer the opportunity to optimize the effective memory bandwidth to meet the needs of the application(s) they are using. Figure 3 shows that four DDR4 channels give you a bandwidth of 68 – 100 GB/s using 616 signal pins. In future designs, the CPU can add an additional 50 – 896 GB/s by sharing 2 PCIe root ports with Gen-Z. Clearly, that is a lot more bandwidth for the hungry cores that will be added.
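The DDR4 numbers quoted above can be checked with a few lines of arithmetic. This is a rough sketch: the 154-pin figure comes from the text, while the 64-bit (8-byte) data bus per channel and the DDR4-2133 and DDR4-3200 speed grades are assumptions chosen because they approximately bracket the 68 – 100 GB/s range. The function names are illustrative.

```python
DDR4_SIGNAL_PINS_PER_CHANNEL = 154  # figure quoted in the text


def ddr4_signal_pins(channels: int) -> int:
    """Signal pins needed for a given number of DDR4 channels."""
    return channels * DDR4_SIGNAL_PINS_PER_CHANNEL


def ddr4_peak_bandwidth_gbs(channels: int, megatransfers_per_s: int,
                            bus_bytes: int = 8) -> float:
    """Theoretical peak bandwidth in GB/s.

    Assumes a 64-bit (8-byte) data bus per channel, so each transfer
    moves bus_bytes bytes.
    """
    return channels * megatransfers_per_s * bus_bytes / 1000


def gbs_per_pin(bandwidth_gbs: float, pins: int) -> float:
    """Bandwidth delivered per signal pin, a simple efficiency measure."""
    return bandwidth_gbs / pins


if __name__ == "__main__":
    pins = ddr4_signal_pins(4)
    print("pins for four channels:", pins)
    print("pins for one balanced two-channel step:", ddr4_signal_pins(2))
    for grade in (2133, 3200):  # assumed speed grades, in MT/s
        bw = ddr4_peak_bandwidth_gbs(4, grade)
        print(f"DDR4-{grade}: {bw:.1f} GB/s, {gbs_per_pin(bw, pins):.3f} GB/s per pin")
```

The per-pin figure is the useful one for comparison: any interconnect that delivers more bandwidth per pin, or reuses pins the package already has, eases the pin-count pressure described above.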

The Gen-Z fabric has the performance required to fully compose systems from pools of resources. Looking again at figure 2, you can imagine a rack that holds a number of CPUs in a chassis, a box of GPUs, a container full of memory, a variety of FPGAs, and storage elements. Using management software, you can allocate some of these resources to build a true bare-metal server that can be deployed in minutes. This flexibility also allows you to add and remove resources as requirements shift, reducing the problem many IT departments have with trapped resources (resources stuck in a server that aren’t available to other applications without a large redeployment effort).

The question I get most often after talking about Gen-Z is, “When will this be available?” Dell EMC and the members of the Gen-Z Consortium are actively working on offerings, and you can expect to see them in 2020. Join us at the Gen-Z booth during either Flash Memory Summit, August 6–9, or Supercomputing, November 11–16, to see demos of the technology and learn more about the features and benefits this fabric might offer your company.