Storage outages can be expensive or even catastrophic. In fact, the impact of storage downtime is becoming increasingly critical as companies leverage virtualization to drive higher efficiency in their infrastructure. IT managers want to drive up virtual machine density in their servers, but the more virtual machines that depend on a particular storage container in the disk array, the greater the impact to the business when that data becomes inaccessible.

From another perspective, eliminating storage downtime can open up opportunities to drive much greater infrastructure efficiencies with virtualization. With the risk of workload downtime off the table, IT managers can maximize virtual machine density in their servers (in effect, ratchet up efficiency from the compute resources in the infrastructure) without increasing the business risk inherent in downtime. What’s more, they can proliferate virtual infrastructure more broadly across applications that are business-critical, where any downtime would be especially punishing to the business.

The things that can disrupt access to data usually fall into a few common buckets: natural disasters like fires, storms, and earthquakes; system failures from things like hardware breakage or operator mistakes; power and infrastructure outages like power grid contingencies (e.g., rolling power outages) and network link failures; and finally, maintenance and upgrade operations like upgrading, replacing and adding new hardware, updating and adding new software, and migrating workloads. Different elements of a disk array can sustain system uptime or preserve access to data through these various situations, for example, redundant, hot-swappable hardware subsystems; array controller failover/failback; and synchronous data mirrors. Data availability reaches across all these system elements collectively, but continuous data availability requires a special additional capability that enables workloads to synchronously coexist and be accessible on different arrays at different locations. This is where Dell’s Live Volume software, a feature of the SC Series operating system, comes into play. It ensures that virtualized applications never lose data access, no matter what contingency arises.

Until recently, getting a storage system that could deliver zero workload downtime meant huge investments in monolithic frame arrays—those are the arrays that require a specialized staff of highly trained experts and have deployment costs that exceed the budget of the majority of companies on the planet. But things are changing now. While most of the newer all-flash and hybrid-flash architectures out there do not currently support this capability, you will find today that several of the more mature enterprise-class disk array platforms from leading IT vendors—including the Dell Storage SC Series arrays—now have capabilities for continuous data availability. But what you will also find is that there are significant differences in the way these leading disk arrays work to provide continuous availability. These differences can have substantial impact on how effectively businesses can leverage continuous data availability to add value and increase efficiency in the infrastructure.

Some of the differences are quite obvious. For example, some disk arrays rely on separate add-on systems (sometimes from third-party providers) to deliver continuous availability. This means an extraneous hardware and software footprint, disparate management, and separate service contracts. The complexity and cost of these add-on solutions can be significant compared to array-based continuous availability software like Live Volume.

Differences across other solutions are not quite as obvious, but can still disadvantage customers. For example, some solutions provide continuous availability as a blanket SAN-wide function, requiring zero-downtime SLAs for every volume configured. But what about tier-2 and -3 applications that do not require that level of uptime? With these solutions, customers may need to deploy different SAN silos dedicated to different tiers of storage, which is definitely not a progressive approach to efficiency.

You will find yet more differences across the solution spectrum that disadvantage customers in different ways, for example:

- Incomplete GUI support and a usability environment that generally assumes specially trained operators.

- Incomplete functionality that exposes downtime gaps for things like data migrations and lifecycle upgrades.

- Incomplete automation and lack of self-healing across the failure domain, which makes failback after the environment is normalized risky and challenging.

- Rigid operational parameters that prohibit mixed replication strategies and customizable SLAs for different workloads, or prohibit changing SLAs on the fly.

- Software licensing terms that continually inflate TCO by requiring periodic renewal or re-licensing with equipment upgrades.

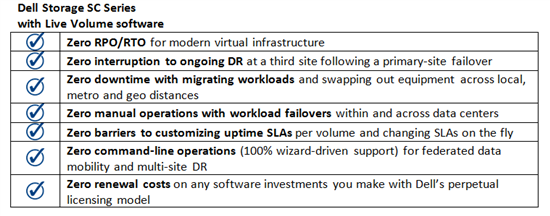

Across the spectrum of business continuance solutions from the leading storage vendors, except for Dell, you will find some, or several, of these limitations in the continuous availability solutions they offer for their enterprise disk array platforms. With Live Volume software for SC Series arrays, Dell delivers business continuance capabilities that fulfill zero workload downtime without any of these disadvantages. With Live Volume and SC Series storage, virtualized workloads are completely “zero-proofed.”

Live Volume is available for Dell Storage SC9000, SC8000, SC4020 and S40 arrays and works in federated clusters of mixed-model SC Series arrays. Also, Live Volume supports all major virtualized server components, including VMware® vSphere®, Microsoft® Hyper-V®, Citrix® XenServer® and Oracle® VM environments. Read more about Dell Live Volume software here.