Welcome to Inside Flash, your connection to Flash Technologies here at EMC.

Traveling as part of EMC's Flash Business Unit, I get the same types of questions all the time. “What’s the VFCache installation process?”, “Is it really that easy?” or “What do I need to do to my storage array and application?” Let me start by saying… Yes, it is that easy. To prove it, I spent some time with Scott Lewis. Scott is part of EMC's Emerging Technology Division and has been helping our customers experience the effects of EMC VFCache since its introduction at EMC World 2011.

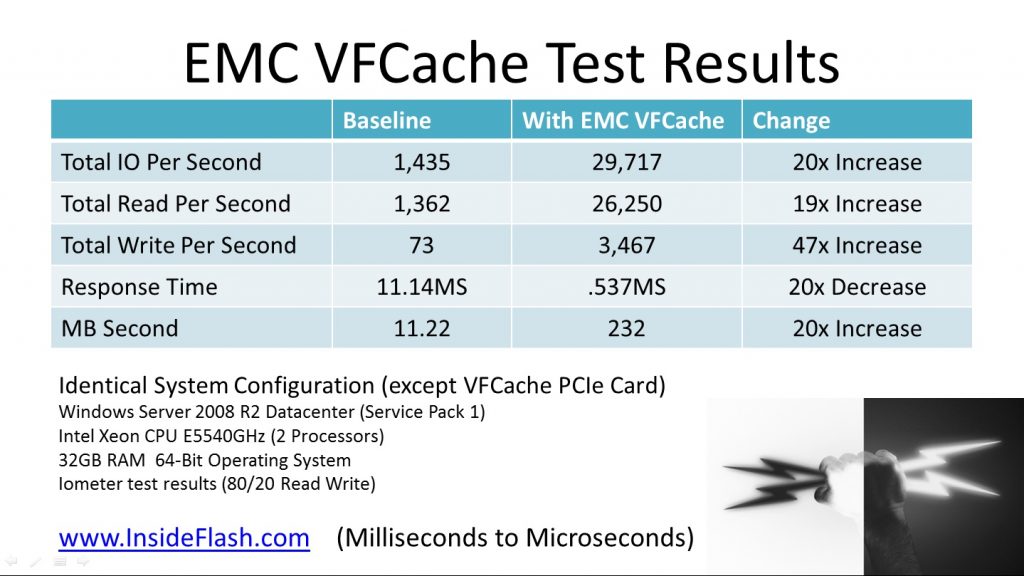

Scott and I found two identical servers in one of many EMC labs (Detroit, Michigan this time). When I say identical, I mean exactly the same: Windows Server 2008 R2 Datacenter SP1 (64-bit), 32GB RAM and Intel Xeon CPUs, both Fibre Channel attached to an EMC CX array. The only difference: one has a VFCache PCIe card and VFCache software. The other does not.

In this episode of “Inside Flash”, Scott and I stepped through the process of installing the hardware and software. Install the hardware, install the VFCache software (reboot), enable the card, enable the LUN (Windows drive) to be accelerated, and that’s it. Then we ran Iometer with the same parameters against both servers. What changes did we make to the application? None!!! What changes did we make to the storage array? None!!! The results speak for themselves. 20x increase in IO per second and 20x decrease in response time!! That’s the VFCache WOW Factor!

Most interesting to me is the 47x increase in Write IO. The VFCache value prop has always been performance AND protection: accelerate Read IO while protecting applications by keeping Write IO consistent with the underlying storage infrastructure. Writes are acknowledged by the storage array before the Write acknowledgement is returned to the server. (This is a very important part of VFCache, and a topic for another blog. Side note: a card configured as a split card will immediately acknowledge the Write and is a perfect fit for SQL TempDB, among other temporary data sets.) This storage consistency allows EMC customers to maintain the value associated with the Enterprise Storage Network. Local replicas, remote replicas and continuous data protection are all maintained while VFCache increases Read IO at the host, providing performance AND protection.
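For readers who like to see the idea in code, here is a toy sketch of the write-through pattern described above. It is not the VFCache driver or any EMC API. The class name, the counters and the dictionary standing in for the array are all made up for illustration, and keeping the cached copy in sync on a Write is an assumption of this toy model.

```python
class WriteThroughCache:
    """Toy write-through read cache. Illustrative only, not EMC's implementation."""

    def __init__(self, backing_store):
        self.backing_store = backing_store  # stands in for the storage array
        self.cache = {}                     # stands in for the host-side flash card
        self.array_reads = 0                # reads that had to go to the array
        self.array_writes = 0               # writes committed to the array

    def read(self, block):
        # Cache hit: served at the host, the array is never touched.
        if block in self.cache:
            return self.cache[block]
        # Cache miss: fetch from the array, then keep a copy for next time.
        self.array_reads += 1
        data = self.backing_store[block]
        self.cache[block] = data
        return data

    def write(self, block, data):
        # Write-through: the array commits the write first...
        self.backing_store[block] = data
        self.array_writes += 1
        # ...then the cached copy is updated (an assumption of this sketch)...
        self.cache[block] = data
        # ...and only then is the write acknowledged to the caller.
        return True


array = {0: b"old", 1: b"other"}
cache = WriteThroughCache(array)

cache.write(0, b"new")   # lands on the array before it is acknowledged
cache.read(1)            # first read of block 1 goes to the array
cache.read(1)            # second read is served from the cache
```

After these calls the array holds the new data for block 0, and only one of the two reads of block 1 reached it. Because the array always has the current data, anything that works from the array's copy (replicas, snapshots and so on) keeps working unchanged.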

So why did Write IO increase 47x? The implementation of VFCache moves Read operations closer to the application. Specifically, Read IO happens on the PCIe bus and not on the storage area network. In this case, this allowed the EMC CX array to leverage Write Cache while Reads were processed at the host. The net result… increased Read and Write operations.

Increased IO is great, but what about response time? Response time dropped from 11 milliseconds to 537 microseconds (0.537 milliseconds). Remember, we made no changes to the storage or the applications. Install the card, install the device driver, enable drives as cache devices, and that’s it!!!
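As a quick sanity check, the two response times quoted above line up with the roughly 20x figure. The variable names below are mine. The only inputs are the two measurements from the test.

```python
# Response times from the Iometer runs quoted in this post.
before_ms = 11.0          # server without VFCache, in milliseconds
after_ms = 537 / 1000     # server with VFCache: 537 microseconds in milliseconds

# Ratio of the two response times.
speedup = before_ms / after_ms
print(f"Response time improved by about {speedup:.1f}x")
```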

Don’t believe me? Let me help you see for yourself. Contact me directly at sam.marraccini@emc.com and thanks for watching insideflash.emc.com. See you next time, in the Flash lane!!!