July 2012 marked a pretty significant milestone for me. It was the official 10-year anniversary of my first hypervisor installation (VMware ESX 1.5.2 for those interested). I can still remember the fear in the sales rep's voice over the phone the first time I called and said "I'm interested in buying 400 processors." While I didn't know it at the time, I was doing something revolutionary, and for the last decade I simply haven't stopped pushing that envelope. As Dell prepares to arm its customers with the technology needed to tackle the evolutionary changes occurring within their data centers, I wanted to take a quick look back at the past decade in computing to help highlight why these future changes are necessary in the first place.

The Introduction of Virtualization

Virtualization was, and to this day continues to be, a breath of fresh air for a highly inefficient x86-based IT model. IT had fallen into a habit of over-provisioning and under-utilizing. Any time a new application was needed, it often required multiple systems for Dev, QA and Production use. Take this concept and multiply it out by a few servers in a multi-tier application, and it wasn't uncommon to see 8-10 new servers ordered for every application that was required. Most of these servers sat heavily underutilized, since they existed only to support an irregular testing schedule. It also often took a relatively intensive application to even put a dent in the total capacity of a production server.

All of this exposed IT to scrutiny over how it was negatively impacting the business and, ultimately, forcing it to consistently make compromises:

1) The business missed deadlines and couldn't respond fast enough to customer demands

2) IT budgets were insufficient to deliver against the most critical business initiatives

3) Trade-offs had to be accepted to get resiliency and performance for applications and IT services

After considering not only the capital expense of the new hardware, but also the operational expenses to keep it all running, it was clear that IT was spending a lot of money and getting very little return.

Enter virtualization. Virtualization fundamentally started as a way to get control over the wasteful nature of IT data centers. This server consolidation effort is what helped establish virtualization as a go-to technology for organizations of all sizes. IT started to notice CapEx savings by buying fewer but higher-powered servers to handle the workloads of 15-20 physical servers. OpEx savings were realized through the reduced energy needed to power and cool servers. This initial push into virtualization was the hook that got the technology looked at, but it wasn't what really established virtualization as the future of the data center.

Six to seven years ago, we saw what I feel truly cemented virtualization as "the future." It was the realization that virtualization provided a platform for simplified availability and recoverability. Virtualization offered a more responsive and sustainable IT infrastructure that afforded new opportunities to either keep critical workloads running, or recover them more quickly than ever in the event of a more catastrophic failure. Gone were the days of scheduled downtime for remedial updates, strict hardware dependencies, dealing with the "24-Hour Restore Battle" and week-long off-site DR exercises. Traditional x86 IT had been dealt a knockout blow, and virtualization was the new champion.

Speed Bumps towards Adoption

Although it had been crowned as the new king of the data center, virtualization quite quickly became a victim of its own success for several reasons.

First, it actually did its job too well. The simplicity of being able to deploy new workloads gave the false impression to business and IT consumers that servers were now "free." This led to a significant increase in requests for new servers. The thought process was that a large number of these new requests would be temporary in nature, but IT didn't yet have a way to actually track and manage the lifecycle of these VMs to properly reclaim capacity. In talking with customers over the past few years I've heard horror stories of organizations that are provisioning more than 300 VMs/Month. Yes, 300 per MONTH. They have 3-4 administrators doing nothing but provisioning and configuring 13 VMs per day every day, not to mention tracking and decommissioning unused workloads to reclaim vital capacity. Imagine what any IT organization could do if these resources weren't focusing on these mundane tasks, and were instead able to focus on more strategic initiatives.
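To put that provisioning load in perspective, here is a rough back-of-the-envelope sketch. The 300 VMs per month and the 3-4 administrators come from the customer stories above; the figure of 23 working days per month is my own assumption, chosen because it is what makes 300 per month work out to roughly 13 per day.

```python
# Back-of-the-envelope check of the provisioning workload described above.
# From the text: 300 VMs per month, handled by 3-4 administrators.
# Assumption (not from the text): about 23 working days in a month.
VMS_PER_MONTH = 300
WORKING_DAYS_PER_MONTH = 23


def vms_per_day(vms_per_month: int, working_days: int) -> float:
    """Average number of VMs provisioned per working day."""
    return vms_per_month / working_days


def vms_per_admin_per_day(vms_per_month: int, working_days: int, admins: int) -> float:
    """Average number of VMs each administrator provisions per working day."""
    return vms_per_day(vms_per_month, working_days) / admins


if __name__ == "__main__":
    daily = vms_per_day(VMS_PER_MONTH, WORKING_DAYS_PER_MONTH)
    print(f"Team total: {daily:.1f} VMs per working day")
    for admins in (3, 4):
        each = vms_per_admin_per_day(VMS_PER_MONTH, WORKING_DAYS_PER_MONTH, admins)
        print(f"With {admins} admins: {each:.1f} VMs per admin per day")
```

Under those assumptions, each administrator is building and configuring several VMs every single working day, before any time is spent tracking down and decommissioning the ones nobody uses anymore.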

This leads us to the second reason for challenges in transitioning to the new data center model: the tooling was, and to this day remains, unable to comprehend the changing operational roles within IT. I separate these tools into two camps: the Monolithic Solutions and the Point Products.

- Monolithic solutions are more prevalent in large enterprise organizations and require visibility into entire IT infrastructures. This includes many aspects of legacy infrastructure that simply won't go away (mainframes). These monolithic solutions have to accommodate the lowest common denominator, which unfortunately prevents them from fully adapting to the significant shift across the broader IT space. They simply aren't enabling the business to turn the corner and understand how IT could be helping the business become more dynamic and competitive in the market.

- Point products are focused on just a small aspect of IT, and rarely consider the bigger picture of what's happening in the data center. Now don't get me wrong, there are some fantastic point products out there that offer more than enough capability for a segment of the market (normally the SMB space). The problem arises when these organizations begin to mature and really adopt these new data center concepts. At some point, these point products fail to provide enough capability, which either leaves the customer with a gap in their solution or forces them to piece together multiple point products that have limited integration with each other.

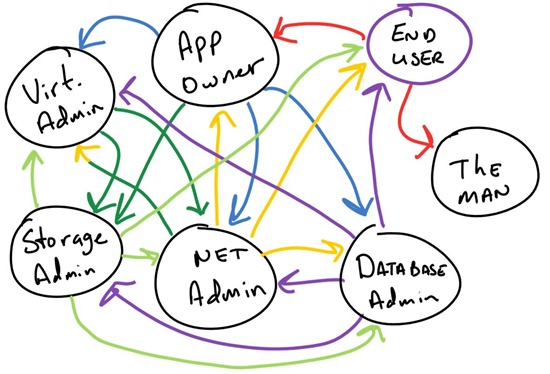

This, of course, leads us to the third and final challenge that is prevalent in today's data center: reduced responsiveness from IT. With a lack of proper tooling and an influx of inefficient IT resource allocation, the business in general is having a hard time buying into the broader "agility" benefits that are supposedly provided by the next iteration of the data center, the private cloud. A significant amount of time is wasted on manual processes performed by individual resources. Combine resource churn with the dependencies across the multiple IT silos and we have a highly inefficient IT organization in a world where IT efficiency gives companies a significant competitive advantage in the market. These inefficiencies compound internally and ultimately turn into a giant game of "blame someone else" instead of providing a collaborative environment in which IT is seen as a business asset rather than an impediment to being more agile than the competition. I've used the "Wheel of Blame" several times in the past, and am still amazed at the number of customers that ask how I got my hands on their internal org chart.

Dell's Approach to the Data Center Evolution

Over the next few weeks you will be introduced to Dell's approach to enabling a simpler IT operational model through our Converged Infrastructure strategy (don't worry, we'll be going into more detail on exactly what we consider "Converged Infrastructure" in our next blog post). Simply put, Dell is looking to help organizations collapse and automate critical administrative tasks to speed up routine work and free up experts for the higher-order needs of the business. Dell will be enabling our customers with several initiatives:

- Innovations in our hardware offerings and how customers can procure these offerings

- Fewer, more intuitive tools to help IT effectively manage the demands of the business

- Streamlined operational processes that minimize manual and repetitive work, freeing up critical resources to better collaborate across various technology domains

Stay tuned here over the next few weeks as we continue to educate you on Dell's strategy and subsequent offerings and work with you to understand and adapt to the change that is inevitable in data center management.