Everything you ever wanted to know about IoT (Internet of Things) in one comprehensive essay.

Over the last five years, the term IoT has been ever-present in what manufacturers, contractors, and developers offer their customers. When one tries to examine the reality behind that mysterious IoT, one quickly discovers that it is a field made up of several layers of solutions whose origins lie in quite disparate domains.

In this essay, I shall attempt to untangle the term IoT and give an overview of the trends emerging from the traditional contracting market and reaching the ultra-high-tech industry of today. Before we get to the glittering technologies, the server rooms with their colorful, blinking LED lights and decision-making systems – in order to understand IoT we must begin at the beginning.

The origins of the IoT lie in the needs of the control, machine, and ultra-low power markets. Such systems have been all around us for more than 20 years: in public buildings, industry, and agriculture. These systems check processes in a given environment (e.g. a manufacturing floor, a plowed field, a greenhouse, or an entranceway), monitor them in real – or close to real – time, and transmit their data to a computer system which provides the user with precise indications of the status on the ground as well as warnings of deviations from the norm.

This is naturally relevant to a vast range of disparate contexts: process control (sequential or batch) of the manufacturing floor, access control, lighting, transportation, building security using cameras and monitoring and alarm systems, and more. For many years, the field was characterized by machines equipped with programmed controllers connected by networks to various detectors on one side and to the computer system on the other side. In fact, for the last 20 years the field has been quite traditional, and the market has been controlled by contracting companies linked to (or part of) construction companies and high-voltage electrical firms.

To understand the change and to try to predict trends in this seemingly traditional market, we shall try to analyze IoT from four technological and commercial perspectives, each of which is a piece of the IoT jigsaw puzzle.

The sensor: This part is a monitoring component or detector and is located at the end point. In the beginning, this was an analog electrode with the ability to measure various parameters (e.g. temperature, light, humidity, acidity, and so on). The electrode’s output consisted of electrical tension (i.e. voltage) that was translated into a measurement. These electrodes were connected to programmed controllers that determined the sampling rate, the opening or closing of the electrical signal, and the transmission of the data to a computer system. The tremendous change in this field occurred along two main axes:

- The variety of sensors and their adaptation to innumerable contexts: Over the years, the market of end-point monitoring components doubled each year in terms of variety and technological capabilities (some being all-purpose components that can be assimilated into every type of environment, some being designated for vertical markets, such as the home market, the vehicle market, the aviation market, etc.).

- The cost of sensors: Sensors are relatively simple, mass-produced components, and their cost has been dropping to just a few dollars apiece (10 years ago, a temperature electrode cost hundreds of dollars). Furthermore, connecting them to a programmed controller is no longer always necessary: the sensors themselves contain a communication component, and it is very easy to connect them to a communication network (wired or wireless).
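The translation of an electrode's voltage into a measurement, mentioned at the start of this section, can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the `LinearSensor` class, its field names, and the calibration values (0 V for -40 °C, 5 V for 85 °C) are hypothetical, and real sensors are often non-linear and need a calibration curve instead.

```python
from dataclasses import dataclass


@dataclass
class LinearSensor:
    """Hypothetical analog sensor with a linear voltage-to-value response."""
    v_min: float      # voltage at the low end of the calibrated range (volts)
    v_max: float      # voltage at the high end of the calibrated range (volts)
    value_min: float  # measurement that corresponds to v_min
    value_max: float  # measurement that corresponds to v_max

    def to_measurement(self, voltage: float) -> float:
        # Clamp to the calibrated range, then interpolate linearly.
        v = min(max(voltage, self.v_min), self.v_max)
        fraction = (v - self.v_min) / (self.v_max - self.v_min)
        return self.value_min + fraction * (self.value_max - self.value_min)


# Assumed calibration: 0 V corresponds to -40 degrees C, 5 V to 85 degrees C.
temperature_probe = LinearSensor(v_min=0.0, v_max=5.0, value_min=-40.0, value_max=85.0)

# A controller would sample the voltage at a fixed rate; here we convert three samples.
readings = [temperature_probe.to_measurement(v) for v in (0.0, 2.5, 5.0)]
print(readings)
```

In a classical installation this conversion ran on the programmed controller; in a modern sensor with its own communication component, it would typically run on the sensor itself before the value is sent over the network.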

The trend in the sensor market is one of exponential growth. The number of monitoring components we connect to communication networks will continue to grow and prices will continue to drop. In taking the long-term view, we can say with a high degree of certainty that monitoring components will become an inseparable part of every environment in every context: from sensors permanently connected to the human body to monitor health indicators (pulse, oxygen saturation, glucose levels) to home sensors (e.g. every electrical appliance will be integrally fitted with a sensor that can connect to the home network). Sensors will cover our streets, roads, cars, and workplaces.

The gateway, i.e. communication network: The number of components we connect to private and public networks is huge and is constantly growing. Our communication networks, too, have undergone changes to support that growth.

- The growth of gateway types: In the past, a specifically designated wired network was an inseparable – and expensive – part of a classical control project. Today, the gateways are very varied, consisting of wireless and cellular networks using different technologies; there are even networks installed on existing wired infrastructures (such as the electrical grid). The costs of the infrastructures and of the communications providers are decreasing.

- Communication protocols: In the past, the communication protocols were relatively outdated and fell short of an acceptable standard (e.g. RS-232, RS-485). The breakthrough came with the ability to connect sensors to “standard” communications, not just as an engineering standard but also through code interfaces that make it possible for sensors to communicate with each other as a decentralized network. Even so, the field currently suffers from an abundance of interfaces due to the huge number of manufacturers. We may expect that, in the near future, there will be a gradual transition to simpler communication interfaces between different sensor worlds until a unified language emerges to become the industry standard.

The Fog: Fog computing, or fogging, is a relatively new term not found in the context of classical control. The fog is the part that sits between the sensor and the computer system receiving and processing the data. Let us take, for example, a fighter jet flying a mission. The plane is equipped with dozens of various sensors that, at every given moment, sample every parameter of the flight in real time (altitude, engine status, temperatures, pressures, fuel level, arming status, etc.). These data represent a tremendous amount of information in real time, but only a small portion is sent to ground control. The fog, then, is a system that makes simple decisions about filtering the information before transmitting it to the computer system, because the sheer volume of information gathered cannot be sent fast enough to the processing center. The fog is an optional component in an IoT system: it is not part of the traditional control system. The exponential growth in the number of sensors, however, calls for a component that is capable of making simple decisions on filtering or adjusting the sampling rate and of transmitting just part of the information to the next stage.
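One simple filtering decision a fog component could make is to forward a reading only when it differs meaningfully from the last value it forwarded. The sketch below is one possible approach, not a description of any particular product; the function name `deadband_filter`, the threshold of 5, and the altitude samples are all made up for illustration.

```python
def deadband_filter(samples, threshold):
    """Yield only samples that differ from the last forwarded one by at least `threshold`."""
    last_sent = None
    for value in samples:
        # Always forward the first sample; afterwards, forward only significant changes.
        if last_sent is None or abs(value - last_sent) >= threshold:
            last_sent = value
            yield value


# Hypothetical altitude readings sampled in quick succession.
altitude_samples = [1000, 1001, 1002, 1010, 1011, 1025, 1024]

# Only three of the seven readings are passed on to the processing center.
forwarded = list(deadband_filter(altitude_samples, threshold=5))
print(forwarded)
```

The same idea extends to adjusting the sampling rate: a fog node might sample slowly while values are stable and speed up once the change exceeds the threshold.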

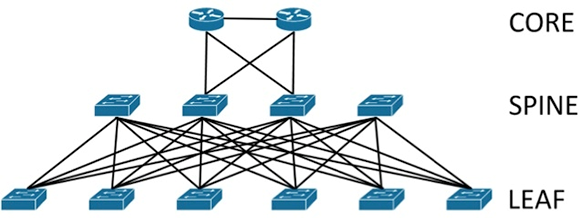

The data center: In the past, the data center (also known as the back end), which is the computer system receiving all the data from the control system, was relatively simple and comprised a single computer receiving all the data in real time from the system of sensors distributed in the field. Today, we realize that the flow of raw data (even after the fog’s filtering) is enormous and requires a suitable computer infrastructure: computing capacity grows as communication becomes faster and storage systems become larger and more sophisticated. Growth, or scaling, is a topic worthy of its own essay and cannot be fully discussed here, but it is obvious that such a huge amount of information cannot be handled with the traditional methods. It needs hardware that can support rapid growth and change, and non-relational databases that can receive a lot of information from several sources simultaneously.

What is worth noting and elaborating on, especially if we wish to predict global trends, is the applicative part: the world of applications is actually the part that, at the lowest layer, handles information and presents the conclusions, warnings, or insights to the end user. We can expect the leap here to be the biggest. Disparate information sources will funnel data to a computer system that will receive and filter it. From this point on, smart work will be done to analyze the information. Correlation engines that know how to draw conclusions from disparate contexts will constitute the biggest breakthrough of all in the field.

Let us use an example, such as a “traditional” home-based IoT system consisting of a warning and alarm system receiving indications of pressure exerted on the windows and attempts to open the door or bend the security bars. The information from the sensors is funneled to a computer system that makes a real-time decision to activate all security cameras in record mode, send a warning to the resident, and call the police because it would seem that a break-in is in progress.
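A decision of this kind can be sketched as a small correlation rule. The sketch assumes, purely for illustration, that the system reacts when at least two different intrusion sensors fire within 30 seconds of each other; the sensor names, action names, and thresholds are hypothetical and not taken from any real alarm product.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class SensorEvent:
    sensor: str       # hypothetical sensor name, e.g. "window_pressure"
    timestamp: float  # seconds since an arbitrary reference point


# Assumed set of sensors that indicate a possible intrusion.
INTRUSION_SENSORS = {"window_pressure", "door_tamper", "bar_bend"}


def decide_actions(events, window_seconds=30.0, min_distinct=2):
    """Return response actions if enough distinct intrusion sensors fire close together."""
    relevant = sorted(
        (e for e in events if e.sensor in INTRUSION_SENSORS),
        key=lambda e: e.timestamp,
    )
    for i, first in enumerate(relevant):
        # Collect the distinct sensors that fired within the window starting at `first`.
        distinct = {
            e.sensor
            for e in relevant[i:]
            if e.timestamp - first.timestamp <= window_seconds
        }
        if len(distinct) >= min_distinct:
            return ["record_cameras", "notify_resident", "call_police"]
    return []


events = [SensorEvent("window_pressure", 0.0), SensorEvent("door_tamper", 12.0)]
print(decide_actions(events))
```

Requiring more than one sensor is a design choice that trades a slightly slower reaction for fewer false alarms; a single sensor firing alone returns no actions in this sketch.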

Other examples could be real-time changes in traffic lanes to reduce congestion, or facial recognition systems deciding what content – advertising, work-related, or other – to show us. Our last example is a system that monitors our bodies and is able to make a decision based on accelerated pulse rate, perspiration, or reduced blood oxygen, issue a warning that the user is experiencing a medical emergency, and also summon the EMTs. On the other hand, the same symptoms might appear if our favorite sports team is about to lose a critical match. Therefore, a computer system sampling a large number of people at every given moment could learn what constitutes a normal reaction and differentiate it from a medical emergency based on a sampling consisting of the whole population.
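The idea of telling a medical emergency apart from a shared reaction can be illustrated by comparing one user's reading with what the rest of the population shows at the same moment. The sketch below uses a plain z-score as a stand-in for whatever a real system would learn; the function name, the threshold of 3, and all pulse values are invented for illustration.

```python
from statistics import mean, stdev


def is_outlier(user_pulse, population_pulses, z_threshold=3.0):
    """Flag a pulse reading that lies far above the population's current readings."""
    mu = mean(population_pulses)
    sigma = stdev(population_pulses)
    if sigma == 0:
        # Everyone reads the same value; anything higher stands out.
        return user_pulse > mu
    return (user_pulse - mu) / sigma > z_threshold


# Hypothetical pulse rates of other users during a tense match: everyone is elevated.
during_match = [118, 122, 125, 120, 119, 121, 124, 123]
# Hypothetical pulse rates of other users on a quiet evening.
quiet_evening = [68, 72, 70, 71, 69, 73, 70, 67]

# The same reading of 126 is unremarkable during the match but alarming on a quiet evening.
print(is_outlier(126, during_match))
print(is_outlier(126, quiet_evening))
```

A real system would combine several indicators (perspiration, blood oxygen) and a per-user history rather than a single population snapshot.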

This is just the tip of the iceberg of the systems referred to as artificial intelligence (AI), which are also on the increase. In these systems, the turning point is the learning algorithm, which changes and improves as the amount of information the system takes in grows.

Another topic that must be given special attention is information security. Naturally, once the number of distributed sensors grows significantly, then invasion of privacy, exploitation of security weaknesses, and cybercrime grow as well. The world of IoT needs special emphasis on security because it affects everything: from the end (the area being monitored) through the gateway to the central computer system. It is therefore critical that sensor developers devote a great deal of attention to information security right from the moment they begin to define the product specifications. The level of security must be maintained from end to end, and include the gateway, the central computer system, and the application. The security solutions needed for this world must be holistic and comprehensive. A link that is weak from a security perspective is a potential point of failure for the entire system.

To summarize: In the near future, the IoT world will make our lives more computerized and smarter, and will simplify complex processes. To prepare for this brave new world, we must meticulously plan our computer systems from end to end and take great care with information security while selecting and implementing suitable applications.